Read this article on LinkedIn: https://www.linkedin.com/pulse/geometria-do-significado-como-o-chatgpt-transforma-palavras-sanchez-tjrif/?trackingId=OiWd5mbYQBOumr4vIbvxTw%3D%3D

SPOILER: In 2026, I'll be back in the classroom teaching Deep AI.

"ChatGPT doesn't understand the world with emotion—it understands it with geometry."

In recent months, many people have been trying to understand how a language model like ChatGPT "understands" what you say. And the truth is that it... doesn't understand the way we do. But it does understand, in its own mathematical way.

What for us is intuition and context, for it is geometry and probability.

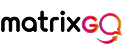

Words have ceased to be text.

Before generative artificial intelligence, words were just symbols. With embeddings, they became points in a mathematical space. Each word is a vector (a sequence of numbers), and words with similar meanings sit close to each other in this space.
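A minimal sketch of what "each word is a vector" means in practice: an embedding table is a lookup from a word's index to a row of numbers. The vocabulary, the four-dimensional values, and the names `VOCAB`, `EMBEDDINGS`, and `embed` are hypothetical; real models learn vectors with hundreds or thousands of dimensions, and ChatGPT operates on subword tokens rather than whole words.

```python
# Hypothetical toy vocabulary: word -> row index in the embedding table.
VOCAB = {"michael": 0, "basketball": 1, "quark": 2, "paris": 3}

# Hypothetical embedding table: one row (vector) per word.
# Values are invented for illustration, not taken from any real model.
EMBEDDINGS = [
    [0.9, 0.8, 0.0, 0.1],  # michael
    [0.8, 0.9, 0.1, 0.0],  # basketball
    [0.0, 0.1, 0.9, 0.1],  # quark
    [0.1, 0.0, 0.1, 0.9],  # paris
]


def embed(word: str) -> list[float]:
    """Look up a word's vector: text in, a sequence of numbers out."""
    return EMBEDDINGS[VOCAB[word.lower()]]


print(embed("Basketball"))  # [0.8, 0.9, 0.1, 0.0]
```

Note that "michael" and "basketball" were given similar rows, while "quark" and "paris" point elsewhere: in a trained model that similarity is learned rather than typed in by hand.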

Imagine an invisible map where:

- “Michael”, “Jordan”, and “Basketball” form an island called Sport;

- “Quark”, “Neutrino”, and “Boson” form the archipelago of Physics;

- “Paris”, “Eiffel”, and “Louvre” inhabit the continent of Culture and Tourism.
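The "invisible map" can be sketched with hand-placed 2-D points and a nearest-neighbor query. The coordinates in `POINTS` and the helper `nearest` are hypothetical, chosen so that each island forms a cluster; real embeddings are learned and high-dimensional, so this only illustrates the idea that related words end up near each other.

```python
import math

# Hypothetical 2-D coordinates, hand-placed so that each "island" forms a cluster.
# Real embeddings are learned and high-dimensional; these are illustrative only.
POINTS = {
    "michael": (1.0, 1.1), "jordan": (1.1, 0.9), "basketball": (0.9, 1.0),
    "quark": (5.0, 1.0), "neutrino": (5.1, 1.2), "boson": (4.9, 0.9),
    "paris": (3.0, 5.0), "eiffel": (3.2, 5.1), "louvre": (2.9, 4.8),
}


def nearest(word: str, k: int = 2) -> list[str]:
    """Return the k words closest to `word` by Euclidean distance."""
    origin = POINTS[word]
    others = [w for w in POINTS if w != word]
    return sorted(others, key=lambda w: math.dist(origin, POINTS[w]))[:k]


for w in ("michael", "quark", "paris"):
    print(w, "->", nearest(w))
```

Each word's two nearest neighbors are the other members of its own island.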

This is ChatGPT's semantic space: the territory where the meaning of a word is literally a matter of geometric position.

Distance is meaningful

Within this space, the proximity of two vectors measures how similar two concepts are. It is usually computed with cosine similarity, a function of the angle between the two vectors.

If the angle is small, the words are similar. If it is large, they are far apart. If they point in opposite directions, they are opposite in meaning.
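For reference, the standard cosine similarity between two $n$-dimensional vectors $\mathbf{a}$ and $\mathbf{b}$ is:

$$\cos(\theta) = \frac{\mathbf{a} \cdot \mathbf{b}}{\lVert \mathbf{a} \rVert \, \lVert \mathbf{b} \rVert} = \frac{\sum_{i=1}^{n} a_i b_i}{\sqrt{\sum_{i=1}^{n} a_i^2}\,\sqrt{\sum_{i=1}^{n} b_i^2}}$$

It equals 1 when the vectors point the same way, 0 when they are orthogonal, and -1 when they point in opposite directions.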

For example:

- "King" and "Queen" → 0.92 (close)

- “King” and “Throne” → 0.88 (strongly correlated)

- "King" and "Banana" → 0.04 (no relation)

- "Good" and "Bad" → -0.70 (direct opposition)
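A minimal Python sketch of the cosine computation, applied to hand-made toy vectors. The vectors in `vectors` are hypothetical, picked only to reproduce the qualitative pattern of the list above (high, near zero, negative); they are not ChatGPT embeddings, so the printed scores will not match the figures quoted.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between a and b: (a . b) / (|a| * |b|), in [-1, 1]."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical 3-D toy vectors chosen by hand to mimic the pattern above;
# they are not real ChatGPT embeddings, so the scores differ from the article's.
vectors = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.7, 0.9, 0.4],
    "banana": [0.0, -0.1, 1.0],
    "good": [0.6, 0.2, 0.3],
    "bad": [-0.5, -0.3, -0.2],
}

for a, b in [("king", "queen"), ("king", "banana"), ("good", "bad")]:
    score = cosine_similarity(vectors[a], vectors[b])
    print(f"{a} / {b}: {score:.2f}")
```

Because only the angle matters, scaling a vector up or down leaves its cosine similarity unchanged.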

In short: semantics has become trigonometry.

Directions also have meaning.

But what's truly fascinating is that ChatGPT doesn't just measure distances; it learns directions.

The difference between two vectors represents a conceptual relationship, and that relationship is approximately linear and consistent across the entire space.

For example:

$$E(\text{king}) - E(\text{man}) + E(\text{woman}) \approx E(\text{queen})$$

This same vector (the direction “male → female”) can be applied to “uncle/aunt”, “actor/actress”, “prince/princess”.
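The king/queen analogy can be reproduced with the vector-offset method commonly used to evaluate word2vec-style embeddings: compute E(a) - E(b) + E(c) and return the nearest remaining word by cosine similarity. The three labelled axes, all values in `E`, and the function names are hypothetical and hand-picked so that the relation holds exactly; in learned embeddings it holds only approximately.

```python
import math

# Hypothetical 3-D embeddings with hand-picked axes: [royalty, acting, gender],
# where gender is +1 for male and -1 for female. Real embeddings are learned,
# have no labelled axes, and satisfy these relations only approximately.
E = {
    "king": [1.0, 0.0, 1.0], "queen": [1.0, 0.0, -1.0],
    "man": [0.0, 0.0, 1.0], "woman": [0.0, 0.0, -1.0],
    "actor": [0.0, 1.0, 1.0], "actress": [0.0, 1.0, -1.0],
    "prince": [0.7, 0.0, 1.0], "princess": [0.7, 0.0, -1.0],
}


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def analogy(a: str, b: str, c: str) -> str:
    """Return the word closest (by cosine) to E(a) - E(b) + E(c), excluding a, b, c."""
    target = [x - y + z for x, y, z in zip(E[a], E[b], E[c])]
    candidates = [w for w in E if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(E[w], target))


print(analogy("king", "man", "woman"))    # queen
print(analogy("actor", "man", "woman"))   # actress
print(analogy("prince", "man", "woman"))  # princess
```

The same offset, E("woman") - E("man"), does the work in all three calls, which is the "one direction, many pairs" idea in miniature.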

This is extraordinary: the model discovers that relationships of gender, time, polarity, or intensity are linear movements in the space of meaning. Instead of memorizing phrases, it learns transformation vectors: the fundamental axes of human language.

Space bends: learning in action.

During training, the model reads billions of texts and adjusts the position of each word. With each error, the vectors are moved slightly towards a more coherent configuration. It's as if the AI were folding space until the map of words mirrors the map of human concepts.
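The idea of nudging vectors after each error can be sketched with a single gradient step on a logistic loss, as in word2vec's negative sampling. This is a swapped-in, simplified technique: ChatGPT's embeddings are trained end-to-end through the whole network on next-token prediction, but the principle of moving vectors a small step along the gradient is the same. All vectors, the learning rate `lr`, and the helper names are hypothetical.

```python
import math


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def sgd_step(word_vec, context_vec, label, lr=0.1):
    """One gradient-descent step on the logistic loss for a (word, context) pair.

    label=1: the pair co-occurred in text, so the step pulls word_vec toward context_vec.
    label=0: a random "negative" pair, so the step pushes word_vec away.
    Only word_vec is updated, to keep the sketch short.
    """
    error = label - sigmoid(dot(word_vec, context_vec))  # size of the "mistake"
    return [w + lr * error * c for w, c in zip(word_vec, context_vec)]


# Hypothetical untrained vectors.
king = [0.1, -0.2, 0.05]
throne = [0.3, 0.1, -0.1]
banana = [0.2, 0.3, 0.4]

before_throne, before_banana = dot(king, throne), dot(king, banana)
for _ in range(100):
    king = sgd_step(king, throne, label=1)  # seen together: move closer
    king = sgd_step(king, banana, label=0)  # negative sample: move apart

print(f"king . throne: {before_throne:.3f} -> {dot(king, throne):.3f}")
print(f"king . banana: {before_banana:.3f} -> {dot(king, banana):.3f}")
```

The step size shrinks as the prediction improves (the `error` term goes toward zero), so vectors move a lot early on and settle as the geometry becomes coherent.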

This continuous deformation creates curvatures: high-density regions (common concepts) and sparse areas (rare concepts). Over time, this space becomes a true topology of thought.

Geometry + Probability = Language

But geometry alone is static. What gives movement to thought is... probability.

For each sentence, the model calculates a probability distribution over which word should come next. These probabilities are generated from the geometry of the space and the direction of the context: words whose vectors point closer to the direction of the context vector have a greater chance of appearing.
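A minimal sketch of how geometry becomes probability: score each candidate word by the dot product between a context vector and that word's output embedding, then turn the scores into a distribution with softmax. The tiny vocabulary, the vectors, and the context vector are hypothetical; in a real transformer the context vector is produced by many attention layers and the vocabulary has tens of thousands of tokens or more.

```python
import math

# Hypothetical output embeddings for a three-word vocabulary (illustrative values).
OUTPUT_EMB = {
    "throne": [1.0, 0.6, 0.1],
    "crown": [0.6, 1.0, 0.0],
    "banana": [0.0, -0.1, 1.0],
}


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def next_word_distribution(context_vec: list[float]) -> dict[str, float]:
    """P(word | context) = softmax over the dot products context . E_out(word)."""
    words = list(OUTPUT_EMB)
    logits = [sum(c * e for c, e in zip(context_vec, OUTPUT_EMB[w])) for w in words]
    return dict(zip(words, softmax(logits)))


# Hypothetical context vector, standing in for something like "The king sat on his ...".
context = [1.0, 0.5, 0.0]
dist = next_word_distribution(context)
for word, p in sorted(dist.items(), key=lambda kv: -kv[1]):
    print(f"{word}: {p:.2f}")
```

"throne" gets the highest probability because its vector is most aligned with the context; "banana" points elsewhere and gets the lowest.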

In simple terms:

ChatGPT "speaks" by sliding between nearby points in the idea space.

And the richer the context, the more precise the direction of the movement.
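The "sliding" picture can be illustrated with a deliberately simplified greedy decoder: average the vectors of the words so far into a context direction, then step to the unused word that points most nearly the same way. Everything here (the 2-D vectors, averaging as the context rule, greedy selection, the name `generate`) is a hypothetical cartoon; real models build the context with attention and usually sample from the softmax distribution instead of always taking the top word.

```python
import math

# Hypothetical 2-D embeddings, hand-placed so that related words are neighbors.
E = {
    "king": [1.0, 0.2],
    "throne": [0.9, 0.4],
    "crown": [0.7, 0.6],
    "jewel": [0.5, 0.8],
    "banana": [-0.8, 0.3],
}


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def generate(start: str, steps: int) -> list[str]:
    """Greedy toy decoder: context = mean of the vectors so far,
    next word = unused word whose vector points most nearly in that direction."""
    path = [start]
    dims = len(E[start])
    for _ in range(steps):
        context = [sum(E[w][i] for w in path) / len(path) for i in range(dims)]
        candidates = [w for w in E if w not in path]
        if not candidates:
            break
        path.append(max(candidates, key=lambda w: cosine(E[w], context)))
    return path


print(" -> ".join(generate("king", 3)))  # king -> throne -> crown -> jewel
```

Each step shifts the context vector a little, so the output traces a path through neighboring points, while the unrelated "banana" is never chosen as long as a closer word remains.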

What does all this mean?

ChatGPT's "understanding" is a geometric-probabilistic phenomenon. Each word is a point. Each sentence is a trajectory. Each conversation is a... network of paths in the space of meaning.

It has no consciousness, but it has consistency. It doesn't feel, but it maps relationships with a precision that borders on the cognitive. Its reasoning is mathematical, but the effect is human.

The mind as geometry

We can say that:

- Geometry provides the meaning (the distances between ideas);

- Probability provides the reasoning (the choice of the next idea);

- And learning provides coherence (the curvature that adjusts the space).

Ultimately, ChatGPT doesn't understand with emotion—it understands with geometry. It thinks in terms of distances, speaks in terms of probabilities, and learns by distorting the very space of meaning.

Conclusion

Generative artificial intelligence is the first system in history to compress human knowledge into a coherent vector space: a space where every idea has coordinates, every relationship has direction, and every meaning is a geometric shape.

When you ask ChatGPT a question, it doesn't search for answers: it navigates through the space of meaning, and what you read is the path of that journey.

🧭 The geometry of meaning is the new language of the artificial mind. And perhaps, on some level, it is also the invisible language of our own mind.

CEO | Leading the AgenticAI Revolution for Enterprise

October 19, 2025