MATRIX GO in the MEDIA

Original article at: IG: https://tecnologia.ig.com.br/dicas/2025-11-07/como-identificar-videos-criados-por-inteligencia-artificial.html

With a new election year approaching in Brazil and manipulated videos spreading on social media, experts warn that content generated by artificial intelligence (AI) is becoming increasingly realistic and difficult to identify with the naked eye. These videos, known as deepfakes, can influence public opinion, spread misinformation, and even interfere in political and judicial decisions.

An AI-generated video is not captured by cameras but produced by algorithms known as generative models. “These systems learn to create scenes, people, and sounds from text descriptions or still images,” Dhigo Correa, guest professor in the MBA in Information, Technology and Innovation at UFSCar, explains to Portal iG.

According to him, the difference is that, although they seem real, these videos are completely synthetic. “Even so, the most advanced systems have difficulty reproducing three-dimensional movement and the coherence of the scenery in a natural way.”

For Nicola Sanchez, CEO of Matrix Go, the principle is similar. “Instead of cameras, actors, and physical sets, AI uses textual, audio, or visual data to generate each frame. This allows for the production of completely synthetic and realistic content, based solely on descriptions,” he says in an interview with iG.

How can the public test the veracity?

Despite the high level of realism, there are noticeable clues. “Incorrect body movements, hands passing through objects, or shadows that don't follow the light correctly are clear signs,” says Correa. He also cites “mechanical” expressions, such as smiles that don't reach the eyes or blinks that are too synchronized.

Sanchez adds that distortions in contrasting shadows and overly smooth transitions also indicate flaws in objects. “In terms of sound, excessively clean voices, lacking natural breathing or variation in intonation, create a feeling of uniformity and artificiality,” he says.

Voice cloning is another challenge. “Today it's possible to generate fake human voices with just a few seconds of recording, imitating the tone and accent of any person,” explains Correa. According to him, AI-generated audio usually has “constant volume, artificial pauses, and a lack of genuine emotion.”

Sanchez adds that the public can perceive “excessively uniform pauses and the absence of small, natural speech flaws. These details are, for now, the main difference between the real and the synthetic.”
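As a rough illustration of the “constant volume” cue Correa describes, the sketch below measures how much loudness varies across short windows of an audio signal. It is only a toy heuristic under stated assumptions, not how any of the detection tools named in this article work: the function names, the window size, and the two synthetic test signals are all hypothetical, and a low score alone does not prove that a recording is synthetic.

```python
import math

def window_rms(samples, window_size):
    """Return the RMS loudness of each consecutive, non-overlapping window."""
    levels = []
    for start in range(0, len(samples) - window_size + 1, window_size):
        window = samples[start:start + window_size]
        levels.append(math.sqrt(sum(s * s for s in window) / window_size))
    return levels

def volume_variation(samples, window_size=400):
    """Coefficient of variation (std / mean) of the per-window RMS levels.

    Values near 0 mean the loudness barely changes from window to window.
    The window size is an arbitrary choice made for this illustration.
    """
    levels = window_rms(samples, window_size)
    if not levels:
        return 0.0
    mean = sum(levels) / len(levels)
    if mean == 0:
        return 0.0
    variance = sum((x - mean) ** 2 for x in levels) / len(levels)
    return math.sqrt(variance) / mean

# Two toy one-second signals at an assumed 8 kHz sample rate.
RATE = 8000
# A 220 Hz tone at perfectly constant volume.
flat = [math.sin(2 * math.pi * 220 * n / RATE) for n in range(RATE)]
# The same tone with a slow 3 Hz swell in loudness, standing in for
# the natural rise and fall of a human voice.
varied = [
    (0.6 + 0.4 * math.sin(2 * math.pi * 3 * n / RATE))
    * math.sin(2 * math.pi * 220 * n / RATE)
    for n in range(RATE)
]

print("constant-volume tone:", volume_variation(flat))
print("tone with varying volume:", volume_variation(varied))
```

The constant tone scores close to zero while the tone with a changing envelope scores clearly higher. Real speech analysis would need decoded audio and far more robust features than this single number.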

Correa recommends the “three-perspective method”:

- Technical perspective – use tools like InVID to extract frames and perform reverse image searches;

- Logical perspective – assess whether the content makes sense, observing shadows, proportions, and coherence;

- Contextual perspective – check if news outlets or fact-checking agencies have published the same information.

Sanchez reinforces the importance of seeking out the original source. “Be wary of videos that look perfect but lack verifiable documentation,” he says.

Tools for verifying authenticity

Among the free resources that help verify videos are InVID & WeVerify, used by journalists to extract frames and metadata from videos; Verify, from the Content Authenticity Initiative, which shows digital signatures of origin; and the Google News Initiative (GNI), which offers training and guides on fact-checking.

Sanchez also cites specialized platforms such as Reality Defender and Hive Moderation, which use pixel patterns and metadata to detect inconsistencies.

With technological advances, distinguishing the real from the fake will become an ever greater challenge. “The trend is for this distinction to depend more on automated authentication systems than on the human eye,” says Sanchez.

Correa cites tools such as UNITE, developed by Google and the University of California, and L3DE, which analyze lighting, temporal coherence, and the laws of physics in videos. “The human eye may not perceive it, but machines will still be able to identify the flaws in other machines,” he says.

The risks and Brazilian legislation

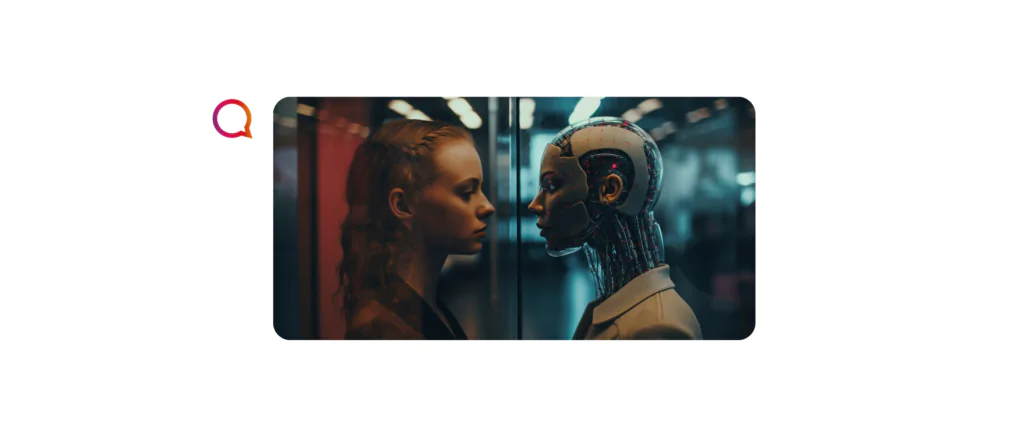

Experts emphasize that sharing fake videos can have serious consequences. “This content can be used to defame people, run scams, and even interfere in elections,” Correa warns.

In Brazil, the Superior Electoral Court (TSE) already prohibits the use of fake or AI-manipulated videos in campaigns and requires visible warnings on artificially generated content. Bills on the subject include PL 2.630/2020, known as the “Fake News Bill,” and PL 146/2024, which proposes more severe penalties for the use of deepfakes for illicit purposes.

Sanchez notes that the Brazilian Internet Bill of Rights and the General Data Protection Law (LGPD) already allow for civil and criminal liability in cases of misuse of AI.

As the use of AI advances, the debate about transparency and digital accountability gains momentum. Identifying fake videos is now a matter of information security and, during election periods, a determining factor in preserving the integrity of the vote and public trust in institutions.